Artificial intelligence (AI): What is it?

The ability of a machine to carry out cognitive tasks such as perceiving, learning, thinking, and problem-solving is known as artificial intelligence, or AI. The benchmark for AI is human-level performance in reasoning, speech, and vision.

In this blog, you will learn the following AI fundamentals:

- What is AI?

- Introduction to levels of artificial intelligence

- History of artificial intelligence

- The goals of artificial intelligence

- Subfields of artificial intelligence

- Types of artificial intelligence

- AI versus machine learning

- Where is AI used? Examples

- Why is AI on the rise now?

Introduction to Artificial Intelligence Levels

Nowadays, AI is used in almost every industry, giving a technological edge to the companies that integrate it at scale. Compared with earlier analytics techniques, AI can bring 50% more incremental value to banking and create 600 billion dollars of value in retail. In transport and logistics, the potential revenue gain is 89% higher.

Concretely, if a company uses AI for its sales and marketing team, it can automate mundane, repetitive tasks, freeing sales representatives to focus on relationship building, lead nurturing, and similar work. A company named Gong, for example, provides a conversation intelligence service: each time a sales representative makes a phone call, the machine records, transcribes, and analyses the conversation. A VP can then use the AI analytics and recommendations to build a winning strategy.

In short, AI provides cutting-edge technology for handling complex data that a person could not process manually. It automates repetitive chores so that employees can concentrate on high-level, value-added tasks. Implemented at scale, AI can lower costs and increase revenue.

History of Artificial Intelligence

The term “artificial intelligence” is fashionable right now, but the idea is not new. In 1956, a group of avant-garde experts from different backgrounds decided to organise a summer research project on artificial intelligence. The project was led by four bright minds: John McCarthy (Dartmouth College), Marvin Minsky (Harvard University), Nathaniel Rochester (IBM), and Claude Shannon (Bell Telephone Laboratories).

A brief history of artificial intelligence is provided below:

| Year | Milestone / Innovation |
|---|---|
| 1923 | The word “robot” was first used in English, in Karel Čapek’s play “Rossum’s Universal Robots”. |
| 1943 | The foundations of neural networks were laid with the first mathematical model of an artificial neuron. |
| 1945 | Columbia University alumnus Isaac Asimov coined the term “robotics”. |
| 1956 | John McCarthy coined the term “artificial intelligence”. The first running AI programme was demonstrated at Carnegie Mellon University. |
| 1964 | In his MIT dissertation, Danny Bobrow showed how computers could understand natural language. |
| 1969 | Researchers at Stanford Research Institute developed Shakey, a robot capable of locomotion and problem-solving. |
| 1979 | The Stanford Cart, the first computer-controlled autonomous vehicle, was built. |
| 1990 | Major demonstrations of machine learning. |
| 1997 | IBM’s Deep Blue chess program defeated the reigning world chess champion, Garry Kasparov. |
| 2000 | Interactive robot pets became commercially available. MIT displayed Kismet, a robot with a face that expresses emotions. |
| 2006 | AI came into wider business use, with companies such as Facebook, Netflix, and Twitter adopting it. |
| 2012 | Google launched “Google Now”, an Android feature that provides the user with predictions. |
| 2018 | IBM’s “Project Debater” debated complex topics against two expert debaters and performed remarkably well. |

Artificial intelligence’s objectives

These are AI’s primary objectives:

- Reducing the time needed to complete specific tasks.

- Making it easier for people to interact with machines.

- Making human-computer interaction more natural and efficient.

- Improving the accuracy and speed of medical diagnoses.

- Helping people learn new information more quickly.

- Improving communication between machines and people.

Artificial intelligence subfields

Here are some significant artificial intelligence subfields:

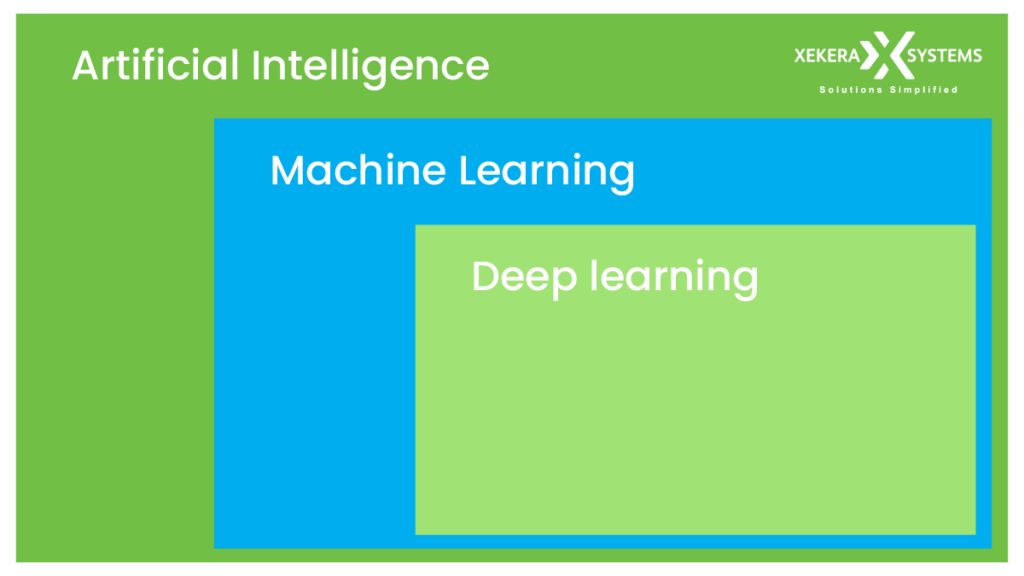

Machine Learning:

Machine learning is the study of algorithms that learn from examples and experience. It rests on the idea that patterns in existing data can be identified and then used to make predictions about new data. Unlike hardcoded rules, which a programmer writes by hand, these rules are discovered by the system itself.
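To make the difference between hardcoded and learned rules concrete, here is a minimal Python sketch. It assumes a toy problem (predicting a flat’s price from its size) with made-up numbers, and it uses ordinary least squares as one simple example of learning a rule from data; it is not the only way machine learning works.

```python
# Hardcoded approach: a programmer writes the rule by hand.
def hardcoded_price(size_m2: float) -> float:
    return 2.5 * size_m2 + 30.0  # hypothetical hand-picked rule, never updated by data


# Machine learning approach: the rule is estimated from examples.
def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical training examples: flat size (m²) and price (thousands).
sizes = [50.0, 70.0, 90.0, 110.0]
prices = [160.0, 220.0, 280.0, 340.0]

slope, intercept = fit_line(sizes, prices)  # learned from the data
predicted = slope * 100.0 + intercept       # prediction for an unseen 100 m² flat
print(f"learned rule: price = {slope} * size + {intercept}")
print(f"hardcoded: {hardcoded_price(100.0)}, learned: {predicted}")
```

The hand-written rule stays fixed whatever the data says, whereas `fit_line` recovers a slope of 3 and an intercept of 10 from the examples and applies them to a case it has never seen.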

Deep Learning:

Deep learning is a subfield of machine learning. “Deep” does not mean that the machine learns more profound knowledge; it means the model uses multiple layers to extract knowledge from the input, and the number of layers is a measure of the model’s depth. For instance, Google’s GoogLeNet model for image recognition has 22 layers.
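The idea of depth as a stack of layers can be sketched in a few lines of plain Python. The weights below are arbitrary placeholders, not values from GoogLeNet or any real model, and ReLU is an assumed choice of activation; the point is only that each layer transforms the previous layer’s output and that depth is simply the number of layers.

```python
def dense(inputs: list[float], weights: list[list[float]], biases: list[float]) -> list[float]:
    """One fully connected layer: a weighted sum per unit, followed by ReLU."""
    return [
        max(0.0, sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def forward(x: list[float], layers) -> list[float]:
    """Pass the input through every layer in turn; each output feeds the next layer."""
    for weights, biases in layers:
        x = dense(x, weights, biases)
    return x


# Placeholder (weights, biases) for a tiny three-layer network: 2 -> 3 -> 2 -> 1 units.
layers = [
    ([[0.5, -0.2], [0.3, 0.8], [-0.6, 0.1]], [0.0, 0.1, 0.2]),
    ([[1.0, -1.0, 0.5], [0.5, 0.5, 0.5]], [0.0, 0.0]),
    ([[0.7, 0.2]], [0.05]),
]

depth = len(layers)  # the model's depth is its number of layers
output = forward([1.0, 2.0], layers)
print(f"depth = {depth}, output = {output}")
```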

Neural Network:

A neural network is a group of connected input/output units in which each connection has an associated weight. Neural networks let you build predictive models from large databases. The model is inspired by the human nervous system, and it can be used for tasks such as computer speech, human learning, and image understanding.
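Here is a minimal sketch of how connection weights can be learned from data, using the classic perceptron learning rule on the logical AND function. The dataset, learning rate, and epoch count are assumptions chosen for illustration; real neural networks have many units and are usually trained with gradient-based methods.

```python
def step(z: float) -> int:
    """Threshold activation: the unit fires (1) only if its weighted input is positive."""
    return 1 if z > 0 else 0


def train_perceptron(samples, epochs: int = 10, lr: float = 1.0):
    """Learn one weight per input connection plus a bias with the perceptron rule."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            z = sum(w * x for w, x in zip(weights, inputs)) + bias
            error = target - step(z)
            # Nudge each connection weight in proportion to its input and the error.
            weights = [w + lr * error * x for w, x in zip(weights, inputs)]
            bias += lr * error
    return weights, bias


def predict(weights, bias, inputs) -> int:
    return step(sum(w * x for w, x in zip(weights, inputs)) + bias)


# Toy dataset: the logical AND function.
and_data = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]
weights, bias = train_perceptron(and_data)
print(weights, bias)
print([predict(weights, bias, x) for x, _ in and_data])
```

After training, the learned weights and bias reproduce the AND truth table without anyone writing the rule by hand.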

Expert Systems:

An expert system is an interactive, reliable, computer-based decision-making system that uses facts and heuristics to solve complex decision-making problems. It is also considered to reflect the highest level of human intelligence and expertise in its domain. The main goal of an expert system is to solve the most difficult problems in a specific field.
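A common way to combine facts with if-then heuristics is forward chaining: keep firing rules whose conditions are satisfied until no new conclusions appear. The sketch below assumes a tiny, hypothetical car-troubleshooting knowledge base; real expert systems use far larger rule sets.

```python
# Hypothetical knowledge base: (set of required facts, conclusion) pairs.
RULES = [
    ({"engine_cranks_slowly", "lights_dim"}, "battery_weak"),
    ({"battery_weak", "battery_older_than_5_years"}, "replace_battery"),
    ({"engine_cranks_normally", "fuel_gauge_empty"}, "refuel"),
]


def forward_chain(facts: set[str], rules) -> set[str]:
    """Fire every rule whose conditions are all known, until nothing new is inferred."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            if conditions <= known and conclusion not in known:
                known.add(conclusion)  # a new fact can enable further rules
                changed = True
    return known


observed = {"engine_cranks_slowly", "lights_dim", "battery_older_than_5_years"}
conclusions = forward_chain(observed, RULES) - observed
print(sorted(conclusions))  # ['battery_weak', 'replace_battery']
```

The second rule only fires because the first one added `battery_weak`, which is what lets such a system chain simple heuristics into a recommendation.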

Fuzzy logic:

Fuzzy logic is a form of many-valued logic in which the truth value of a variable can be any real number between 0 and 1. It is an umbrella term for the concept of partial truth: in real life, we may face situations where we cannot decide whether a statement is entirely true or entirely false.
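To show what a truth value between 0 and 1 looks like in practice, here is a minimal sketch using triangular membership functions and the standard min/max (Zadeh) operators for fuzzy AND and OR. The fuzzy sets “warm” and “humid” and their breakpoints are hypothetical.

```python
def triangular(x: float, a: float, b: float, c: float) -> float:
    """Membership degree in a triangular fuzzy set that is 0 at a and c and 1 at b."""
    if x <= a or x >= c:
        return 0.0
    if x <= b:
        return (x - a) / (b - a)
    return (c - x) / (c - b)


def fuzzy_and(p: float, q: float) -> float:
    return min(p, q)  # standard (Zadeh) fuzzy AND


def fuzzy_or(p: float, q: float) -> float:
    return max(p, q)  # standard (Zadeh) fuzzy OR


def fuzzy_not(p: float) -> float:
    return 1.0 - p


# Hypothetical fuzzy sets: "warm" temperature (°C) and "humid" relative humidity (%).
warm = triangular(22.0, 15.0, 25.0, 35.0)
humid = triangular(60.0, 40.0, 80.0, 100.0)
print(warm, humid, fuzzy_and(warm, humid), fuzzy_or(warm, humid), fuzzy_not(warm))
```

Here 22 °C is “warm” to degree 0.7 and 60% humidity is “humid” to degree 0.5, so “warm and humid” is true to degree 0.5 rather than simply true or false.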

Artificial intelligence (AI) Types

Artificial intelligence is commonly divided into the following types:

- Narrow AI is a type of AI that gives you intelligent assistance with one specific task.

- General AI is a type of AI that would be able to perform any intellectual task as well as a human.

- Rule-based AI applies a set of predetermined rules to an input data set and generates an output according to those rules.

- Decision tree AI is similar to rule-based AI in that it uses a set of predetermined rules to make decisions. However, its tree structure allows branching, so more alternatives can be taken into account.

- Super AI is a hypothetical type of AI that would surpass human intelligence in virtually every task.

- Robot intelligence is a type of AI that gives machines sophisticated cognitive abilities such as planning, reasoning, and learning.
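The difference between rule-based and decision tree AI can be illustrated with a toy loan-approval example. The thresholds and decision labels are invented for illustration only. The rule-based version checks a flat list of predetermined rules in order; the decision tree version uses the same kind of conditions but branches, which lets it consider an extra alternative.

```python
# Hypothetical thresholds and labels, for illustration only.

def rule_based_loan(income: float, debt: float) -> str:
    """Apply a flat list of predetermined if-then rules; the first match wins."""
    rules = [
        (lambda i, d: d > i, "reject"),
        (lambda i, d: i >= 50_000, "approve"),
    ]
    for condition, decision in rules:
        if condition(income, debt):
            return decision
    return "manual_review"  # default when no rule fires


def decision_tree_loan(income: float, debt: float, years_employed: int) -> str:
    """The same kind of rules, organised as nested branches."""
    if debt > income:
        return "reject"
    if income >= 50_000:
        return "approve"
    # Extra branch: lower incomes get another alternative instead of a default.
    if years_employed >= 3:
        return "approve_small_loan"
    return "manual_review"


print(rule_based_loan(30_000, 5_000))                         # manual_review
print(decision_tree_loan(30_000, 5_000, years_employed=5))    # approve_small_loan
```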

AI Vs Machine Learning

Most of the devices and services we use every day, including smartphones and the internet, involve artificial intelligence. Big companies that want to showcase their latest innovation often use the terms artificial intelligence and machine learning interchangeably. However, AI and machine learning differ in a few respects.

Artificial intelligence, or AI, is the science of training machines to perform human tasks. The term was coined in the 1950s, when scientists began exploring how computers could solve problems on their own.

Artificial intelligence refers to a computer that has been given human-like properties. Consider our brain, which effortlessly makes sense of the world around us. The idea behind artificial intelligence is that a machine can do the same. In other words, AI is a broad field of study that imitates human abilities.

Machine learning is a distinct subset of AI that teaches a machine how to learn. Machine learning algorithms look for patterns in data and try to draw conclusions from them, so the machine does not need to be explicitly programmed by people. Instead, it learns what to do from the examples the programmers provide.

Where is AI used? Examples

In this section, we look at some of the many ways AI is used:

- Artificial intelligence is used to reduce or avoid repetitive tasks. For example, AI can repeat a task continuously without getting tired. It never rests, and it is indifferent to the task being carried out.

- Artificial intelligence is used to improve existing products. Before the rise of machine learning, core products were built on hard-coded rules. Rather than building new products from scratch, companies have used artificial intelligence to enhance the functionality of the products they already had. Think of Facebook photos: a few years ago, you had to tag your friends manually. Nowadays, with the help of AI, Facebook suggests which friends to tag.

AI is used across the economy, from marketing and supply chains to finance and the food processing industry. According to a McKinsey assessment, financial services and high-tech communication are leading the AI field.

Why is AI booming now?


Neural networks have been around since the nineties, with the seminal work of Yann LeCun, but they only started to become popular around 2012. Three key factors explain their popularity:

- Hardware

- Data

- Algorithm

Since machine learning is an experimental science, it requires data to test novel theories or methods. Data became easier to access as the internet expanded. In addition, major corporations like NVIDIA and AMD have created powerful graphics chips for the gaming industry.

Hardware

CPU power has increased dramatically over the past twenty years, so a small deep-learning model can now be trained on any laptop. To train a deep-learning model for computer vision, however, you need a more powerful machine. Thanks to investment by NVIDIA and AMD, a new generation of graphics processing units (GPUs) is now available. These chips perform computations in parallel, and the machine can spread the work across several GPUs to speed it up.

For instance, training a model on the ImageNet dataset takes two days with an NVIDIA TITAN X, compared with weeks on a traditional CPU. Large companies also use clusters of GPUs, such as the NVIDIA Tesla K80, to train deep learning models because they reduce data centre costs and improve performance.
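The idea of spreading a computation across several processors can be sketched without a GPU. In this illustrative Python example, a matrix-vector product (the core operation of a neural network layer) is split into chunks of rows, and each chunk is handed to a separate worker thread. The threads only stand in for GPUs to show how the work is divided and recombined; because of Python’s global interpreter lock, this code is not a realistic speed-up demonstration, and the function names are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def matvec_rows(rows, vector):
    """One worker's share: the dot product of each of its rows with the vector."""
    return [sum(r * v for r, v in zip(row, vector)) for row in rows]


def parallel_matvec(matrix, vector, n_workers=2):
    """Split the rows into chunks, compute each chunk on its own worker, then recombine."""
    # Threads stand in for GPUs here; this shows how work is split, not a real speed-up.
    chunk = (len(matrix) + n_workers - 1) // n_workers
    shards = [matrix[i:i + chunk] for i in range(0, len(matrix), chunk)]
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        partials = pool.map(matvec_rows, shards, [vector] * len(shards))
        # map() keeps the original order, so the pieces can simply be concatenated.
        return [value for part in partials for value in part]


matrix = [[1, 2], [3, 4], [5, 6], [7, 8]]
vector = [1, 1]
print(parallel_matvec(matrix, vector))  # [3, 7, 11, 15]
```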

Data

Deep learning provides the structure of the model, and data is the fuel that makes it work. Artificial intelligence is powered by data; without data, nothing can be done. Recent advances in storage technology make it easier than ever to keep large amounts of data in a data centre.

The internet revolution made it possible to collect and distribute the data that feeds machine learning algorithms. If you are familiar with Flickr, Instagram, or any other image-based app, you can guess their AI potential: these sites host millions of tagged images. Those images can train a neural network to recognise an object in a picture without anyone having to collect and label the data manually.

Artificial intelligence combined with data is the new gold. Data is a unique competitive advantage that no company should neglect, and AI extracts the best insights from it. When all companies have access to the same technologies, the one with the data has the edge. To give you an idea of scale, the world produces roughly 2.2 exabytes, or 2.2 billion gigabytes, of data every day.
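As a quick check of the daily-data figure, assuming decimal (SI) prefixes where 1 EB = 10^18 bytes and 1 GB = 10^9 bytes, the conversion works out as follows:

```latex
2.2\ \text{EB} = 2.2 \times 10^{18}\ \text{bytes}
             = \frac{2.2 \times 10^{18}}{10^{9}}\ \text{GB}
             = 2.2 \times 10^{9}\ \text{GB}
```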

To discover patterns and learn at scale, an organisation needs highly diverse data sources.

Algorithm

Hardware is more powerful than ever and data is easily accessible, but another factor that makes neural networks more reliable is the development of more accurate algorithms. Early neural networks were simple matrix multiplications without in-depth statistical properties. Since 2010, remarkable breakthroughs have been made to improve them.
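Why are plain matrix multiplications limiting? Stacking layers that only multiply by a matrix gives nothing a single matrix could not do, because the product of two matrices is itself a matrix. The sketch below checks this with small hypothetical weight matrices, then shows that inserting a non-linear function between the layers changes the result. ReLU is used here as an illustrative choice, not as a list of the post-2010 breakthroughs.

```python
def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def matvec(matrix, vector):
    """Multiply a matrix by a column vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def relu(vector):
    return [max(0, v) for v in vector]


W1 = [[1, 2], [0, 1]]  # hypothetical first-layer weights
W2 = [[3, 0], [1, 1]]  # hypothetical second-layer weights
x = [1, -2]

two_linear_layers = matvec(W2, matvec(W1, x))
single_matrix = matvec(matmul(W2, W1), x)  # W2 times W1 collapses into one layer
with_nonlinearity = matvec(W2, relu(matvec(W1, x)))

print(two_linear_layers, single_matrix, with_nonlinearity)  # [-9, -5] [-9, -5] [0, 0]
```

Two purely linear layers give exactly the same answer as one combined matrix, while the version with ReLU does not, which is why non-linearities let deeper networks represent more than a single layer can.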

Artificial intelligence uses progressive learning algorithms to let the data do the programming. This means a machine can teach itself new skills, such as detecting anomalies or acting as a chatbot.
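As a very simple illustration of letting the data do the programming for anomaly detection, the sketch below learns what normal readings look like (their mean and standard deviation) and flags values that fall far outside that range. The readings and the three-standard-deviation threshold are assumptions, and this z-score rule is a basic statistical stand-in rather than a progressive learning algorithm in itself.

```python
import statistics


def fit_detector(normal_values: list[float]) -> tuple[float, float]:
    """Learn what 'normal' looks like from data: the mean and standard deviation."""
    return statistics.mean(normal_values), statistics.stdev(normal_values)


def is_anomaly(value: float, mean: float, std: float, threshold: float = 3.0) -> bool:
    """Flag values more than `threshold` standard deviations away from the mean."""
    return abs(value - mean) > threshold * std


# Hypothetical sensor readings collected during normal operation.
readings = [10.1, 9.8, 10.0, 10.3, 9.9, 10.2, 9.7, 10.0]
mean, std = fit_detector(readings)

for value in [10.4, 12.0]:
    print(value, "anomaly" if is_anomaly(value, mean, std) else "normal")
```

Nobody writes the boundary between normal and abnormal by hand: feeding the detector different readings automatically produces a different boundary.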

Summary

Artificial intelligence, also known as AI, is the study of teaching machines to mimic or perform human functions.

To teach a machine, a scientist can employ a variety of techniques. In the early eras of AI, programmers created hard-coded programmes that included every conceivable logical scenario and the appropriate response for the machine.

As a system grows more complex, its rules become hard to manage. To overcome this problem, the machine can use data to learn how to handle the situations it meets in a given environment.

The most important ingredient for building a powerful AI is plenty of highly heterogeneous data. For example, a machine can learn different languages as long as it has enough words to learn from.

AI is the new cutting-edge technology. Venture capitalists are investing billions of dollars in AI start-ups and projects, and McKinsey estimates that AI can boost every industry by at least a double-digit growth rate.

Artificial intelligence comes in several forms, including Super AI, General AI, and rule-based AI.